Pattern recognition (aka pattern classification) is the act of taking in raw data and making an action based on the category of the pattern.

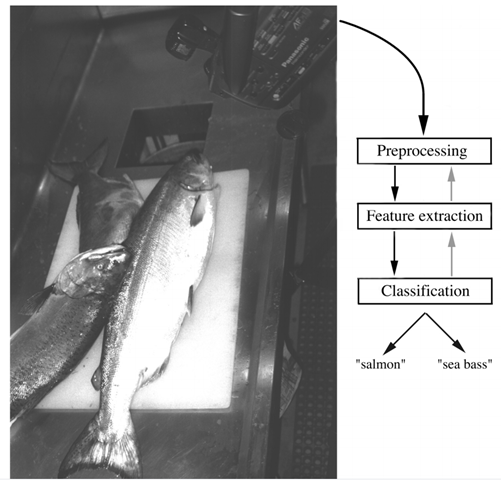

A Classifier Example

Suppose a system (classifier) in a fish packing plant that tries to separate 2 classes of fishes (i.e “Salmon” and “Sea bass”) based some features in each class.

The system will have the following design:

- First a camera captures an image of the fish

- Camera signals are preprocessed to be simplified without losing important information, we might use segmentation to isolate 2 fishes in the same image or isolate a fish from its background in the image.

- The preprocessed signals are then sent to feature extractor which measure some properties “features”

- The extracted features are then sent to a classifier which evaluates it and make a final decision (i.e the sensed fish is salmon or sea bass)

Suppose somebody tells us that the sea bass is generally longer than salmon. Then we understand that model which says that sea bass has some typical length and it is greater than that in salmon. We then need to sense each fish and decide its length and compare it with some critical length l*. To choose l* we need some training samples of different types of fish, make length measurements manually and inspect the results. Finally we get the following histogram.

For sure the result is disappointing because from histogram results we can’t claim that l* is a good feature. We can’t separate salmon from sea bass by length only. As a result we decide to choose a new feature which is the average of light and the critical lightness is x*, we get the following histogram.

Its obvious that the results are much more better, x* now separate sea bass and salmon with less error than when using l*.

Sometimes we shouldn’t assume that the cost of each decision is equally. Some times customers can accept having tasty salmon in a can labeled “Sea bass” while they can’t stand seeing sea bass in their “Salmon” labeled cans. In this case, then we should move our decision boundary to a smaller values of lightning. Which means that the region of salmon (i.e less than x*) will have smaller chance of sea bass while the region of sea bass (i.e more than x*) will have a bigger chance of salmon, which cope with the cost of each classification.

Such results means that there is a single cost associated with our decision and we seek to take the decision which minimize that cost, and this is the mission of Decision Theory which is the parent of Pattern Classification.

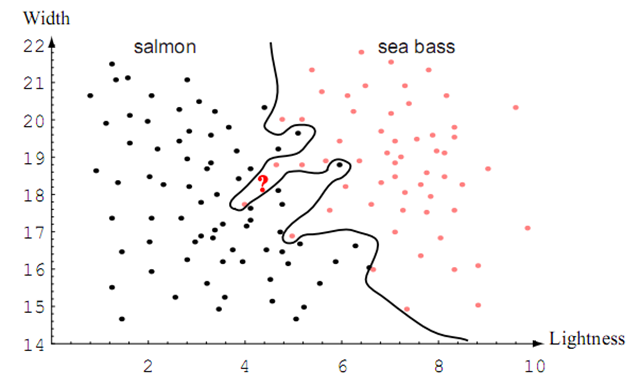

Now we decide to use more than one feature at once to improve the performance of the classifier. We are told that sea bass are wider than salmon. Now we have 2 features to classify a fish and they are: lightness x1 and width x2. This reduces the image of a fish to a vector x = <x1, x2>.

After training our classifier again on 2 features of salmon and sea bass we get the following plot:

Each point represents a fish having lightning x1 and width x2, we draw a line that separates space to 2 regions where any fish fall below it will be classified as salmon and any fish above it will be classified as sea bass. For sure this result is better than the 2 trials before (i.e length alone and lightning alone).

Suppose that there are other more features to use but they are too expensive to measure and we are forced to use the width and lightness, then we need to make our classifier more complex by using a more complex decision boundary rather than the straight line, in this case we would be able to separate the training patterns perfectly.

Using such decision boundary we were able to separate all the fish measures perfectly, but when getting this classifier to work in real life and exposed to novel patterns (i.e new patterns not seen before in the training phase) we shouldn’t not expect from it to give use a right decision. It seems that our complex decision boundary wouldn’t provide a good generalization, it seems to be tuned to a certain training samples rather than based on some underlying characteristic or a true model (i.e model of nature) of all sea bass and salmon.

We might decide to accept the trade off and make our decision boundary as to have a poor performance on the training samples but have a better performance on a novel patterns. The following plot shows such trade off after improving the decision boundary slightly.

Sometimes we wish to use some cost function which when combined with our decision will sometimes lead to an entirely different decision. Sometimes we wish to eliminate damaged fishes to be used as cat food, or separate female and male fish if we are willing to sell caviar. So we see that different decisions may require different decision boundaries and features. and it is obvious that it is very hard to create one general fish classifier to all fish classification problems which differ in decision cost and environment. This should give us appreciation to our brain which is able to rapidly switch between classification tasks. Who created it? it is Allah.

A central technique when training data is insufficient (e.g expensive, rare, etc… ) is to use our knowledge of the problem domain. On method that uses this concept is analysis by synthesis in which one has the model of the class, and can deduce how a pattern is generated.

Related Fields

- Image Processing: used in the preprocessing and feature extraction phase as mentioned.

- Regression: seeks to find some functional description of data, often with the goal of predicting values for new input. The most popular form of regression is linear regression in which the function is linear in the input variable.

- Interpolation: seeks to find the function of intermediate values in a given range.

- Density estimation: seeks to find the probability that a member of a certain category will be found to have particular features.

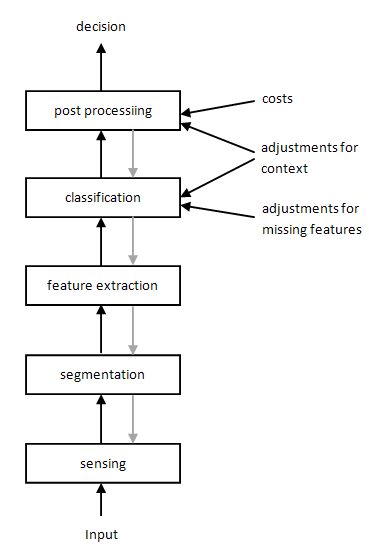

Pattern Recognition Systems

The following figure show the components of a typical pattern recognition system and how this components relate.

We need understand the problem that each component tries to solve.

- Sensing

- The input to our classification system is take through a transducer which in our case was a camera. The quality of the information in the input may depend on characteristics in the camera such as its resolution, bandwidth, sensitivity, etc…

- Segmentation and Grouping

- In our fish example we assumed that each fish is alone on the conveyor belt, and it can be isolated from its background, but what about if there are more than on fish in the same image and they overlapping? How we can isolate each fish? we can use segmentation.

- Imagine a OCR system that recognize character “i” or symbol “=”, each of which is a composite object. we as human easily recognize such patterns. but consider this word BEATS, why don’t we read its such as BE, BEAT, EAT, AT and EATS ? If we read B why don’t we recognize P or I, the character B consists of the parts of both characters.

- The problem of determining subsets and supersets is part of a science known as mereology.

- Feature Extraction

- The task of feature extractor is to characterize an object to be recognized by measures close to one in the same class and far and different measures to object in different class. This leads to the idea of distinguishing features that are invariant to irrelevant transformations.

- We want to choose features in the fish classification system that don’t get affected if the fish is rotated, translated on the conveyer belt or the size of the fish, any property that define the shape and color are invariant to such transformations.

- We want also to choose features that are invariant to the distance from which the camera take a picture to a fish. If the distance between the object and the camera can change then the image is subject to projective distortion.

- Also we want features that are invariant to translation in time and in overall amplitude. In speech recognition we want features to invariant to the rate by which people talk.

- The problem of feature extraction is much more problem and domain dependant than classification.

- Classification

- The task of classifier component is to use the feature vector provided by the feature extractor to assign the object to a category. Because perfect classification is impossible, the classifier task will determine the probability that an object belongs to each class.

- The difficulty arise in the difference between feature values of objects in the same category and objects in other category. This variability is due to complexity or due noise which is defined as any property of sensed pattern which is not due to the true underlying model but instead to randomness in the world or the sensors.

- Post Processing

- A classifier is used to recommend actions, each action has an associated cost. The post-processor uses the output of the classifier to decide on the recommended action.

- Classifier performance is measured by the percentage of new patterns assigned to wrong categories, however it would be better to recommend actions that will minimize overall cost (i.e minimize the risk).

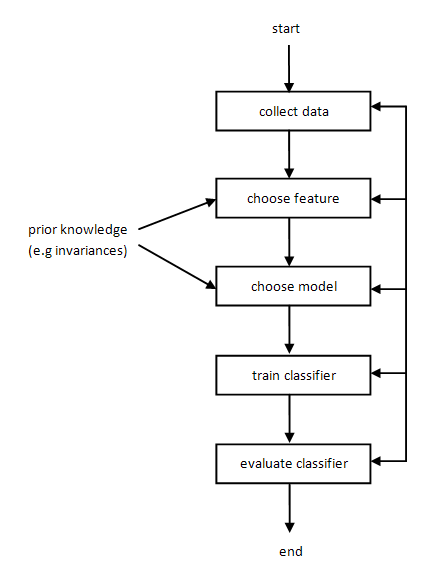

Pattern Recognition System Design Cycle

- Data Collection: Account for a large cost of the system development, the usage of a small sample of data can be used for system feasibility study, but the more the sample data the better performance our system will have.

- Feature Choice: The choice of the distinguishing features is a critical design step and depends on the characteristics of the problem domain.

- Model Choice

- Training: The process of using data to determine the classifier is referred as training the classifier.

- Evaluation: It is important to measure the performance of the system and to identify the need for improvements in its components.

- Computational Complexity: We may ask how an algorithm scales as a function of the number of features dimensions or the number of patterns.

Learning and Adaptation

- Supervised Learning

- The training data is analyzed to infer a function, the inferred function should predict the output given any valid input.

- This requires algorithms that generalize from from training data to unseen situations (i.e novel patterns).

- Unsupervised Learning (aka Clustering)

- There is no explicit teacher and the system forms clusters or “natural groupings” of the input patterns.

- Reinforcement Learning

- The system is rewarded for good classifications and punished for bad ones.

- The system learns with a critic, the only teaching way is whether the classification the system did was right or wrong. The system can’t know why its classification was wrong. There is no specific feedback.

References

Pattern Classification (2nd Edition) by Richard O. Duda,Peter E. Hart,David G. Stork.